When -nc is used on its own, what Wget prevents is not clobbering (the numeric suffixes were already preventing that) but the saving of multiple versions of the same file. "No-clobber" is therefore actually a misnomer in this mode.

# Wget PowerShell download

If a file is downloaded more than once in the same directory, Wget's behavior depends on a few options, including -nc. In certain cases, the local file will be clobbered, or overwritten, upon repeated download. When running Wget without -N, -nc, or -r, downloading the same file in the same directory will result in the original copy of file being preserved and the second copy being named file.1. If that file is downloaded yet again, the third copy will be named file.2, and so on. When -nc is specified, this behavior is suppressed, and Wget will refuse to download newer copies of file. The whole point of -nc is to prevent the actual download.
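To make the naming rule concrete, here is a minimal PowerShell sketch of it. It only illustrates the behavior described above and is not how Wget itself is implemented; the function name `Get-DownloadTarget` and its `-NoClobber` switch are hypothetical names chosen for this example.

```powershell
# Hypothetical helper: decide where a repeated download should be saved.
# Without -NoClobber it mimics the file, file.1, file.2 ... naming.
# With -NoClobber it returns $null when the file exists, meaning "skip the download".
function Get-DownloadTarget {
    param(
        [Parameter(Mandatory)][string]$Path,
        [switch]$NoClobber
    )

    # First download: the plain name is free, so use it.
    if (-not (Test-Path -LiteralPath $Path)) { return $Path }

    # File already exists and -NoClobber was requested: do not download again.
    if ($NoClobber) { return $null }

    # Otherwise find the first unused numeric suffix.
    $n = 1
    while (Test-Path -LiteralPath "${Path}.$n") { $n++ }
    return "${Path}.$n"
}

# Possible usage (the URL is a placeholder, so this stays commented out):
# $target = Get-DownloadTarget -Path '.\file' -NoClobber
# if ($target) { Invoke-WebRequest -Uri 'https://example.com/file' -OutFile $target }
```

Returning `$null` rather than throwing keeps the "skip" case cheap to test for in the calling script.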

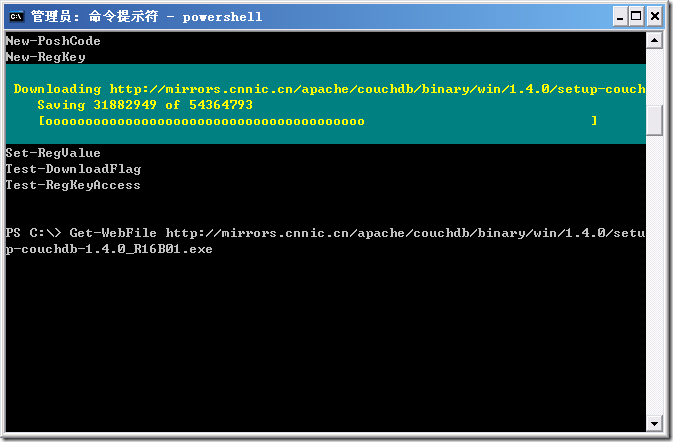

There is no PowerShell switch that behaves exactly like -nc. The script itself checks whether the target file already exists and, if so, doesn't start a second download.

I need to simulate traffic after hours on one of our SharePoint sites to investigate a performance issue where the w3wp.exe process crashes after a few hours of activity. I have decided to develop a PowerShell script that mimics a user accessing various pages on the site and clicking on any links they find.

This PowerShell script should prompt the user for their credentials, for the URL of the start site they wish to crawl, for the maximum number of links the script should visit before aborting and, last but not least, for the maximum level of pages in the architecture the crawler should visit. For simplicity's sake, I have hardcoded these values in the top section of my script. The script should log pages that have been visited and ensure we don't visit the same page more than once.

My PowerShell script will start by making a request to the root site, based on the URL the user provided. Using the Invoke-WebRequest PowerShell cmdlet, I will retrieve the page's HTML content and store it in a variable. Then, I will search through that content for anchor tags containing links (`<a>` tags with the href property). Using a combination of string methods, I will retrieve the URL of every link on the main page, and will call a recursive method that will repeat the same "link-retrieval" process on each link retrieved from the main page. The script will need a try/catch block to ensure no errors are thrown back to the user when URLs retrieved from links are invalid. The script will also account for relative URLs, meaning that if a URL retrieved from a page's content starts with '/', the domain name of the parent page will be used to form an absolute link.

Finally, my script will display some level of execution status in the PowerShell console. For every link crawled, it will display a new line containing the total number of links visited so far, the hierarchical level of the link (compared to the parent site), and the URL being visited.

As mentioned above, in my case I have hardcoded the values of the parameters instead of prompting the user to enter them manually. The script will crawl my blog for a maximum total of 200 links, and won't crawl pages that are more than 3 levels deeper than my blog's home page. The figures show the execution of the script on my personal blog.
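The crawl logic described above could be sketched roughly as follows. This is a simplified illustration under several assumptions, not the original script: the function names (`Get-LinkUrl`, `Resolve-LinkUrl`, `Invoke-SiteCrawl`) are made up for this example, a regular expression stands in for the string methods, `System.Uri` is used to resolve relative links (which covers the leading '/' case), the `-Fetch` parameter is an addition that lets the page download be swapped out, and credential handling is left out entirely.

```powershell
# Hypothetical helper: return the href value of every <a> tag in an HTML string.
# A regex is used here instead of string methods; fragment-only links are skipped.
function Get-LinkUrl {
    param([string]$Html)
    $pattern = '<a\s[^>]*?href\s*=\s*["'']([^"''#]+)'
    $options = [System.Text.RegularExpressions.RegexOptions]::IgnoreCase
    [regex]::Matches($Html, $pattern, $options) |
        ForEach-Object { $_.Groups[1].Value }
}

# Hypothetical helper: turn a (possibly relative) href into an absolute http(s) URL.
# Returns nothing when the href is invalid or uses another scheme (mailto:, javascript: ...).
function Resolve-LinkUrl {
    param([string]$PageUrl, [string]$Href)
    try {
        $uri = [System.Uri]::new([System.Uri]$PageUrl, $Href)
    } catch {
        return $null   # invalid URL: swallow the error and skip the link
    }
    if ($uri.Scheme -in 'http', 'https') { $uri.AbsoluteUri }
}

# Recursive crawl with a visited set, a link budget and a depth limit.
# The defaults (200 links, 3 levels) are the values mentioned in the text.
function Invoke-SiteCrawl {
    param(
        [Parameter(Mandatory)][string]$Url,
        [int]$MaxLinks = 200,
        [int]$MaxLevel = 3,
        [int]$Level = 0,
        [System.Collections.Generic.HashSet[string]]$Visited =
            [System.Collections.Generic.HashSet[string]]::new(),
        # Assumption: pages are fetched anonymously; add credentials as needed.
        [scriptblock]$Fetch = { param($u) (Invoke-WebRequest -Uri $u -UseBasicParsing).Content }
    )

    # Stop when the page is too deep or the link budget is used up.
    if ($Level -gt $MaxLevel -or $Visited.Count -ge $MaxLinks) { return }
    # Add() returns $false when the URL was already visited.
    if (-not $Visited.Add($Url)) { return }

    Write-Host "[$($Visited.Count)] level $Level : $Url"

    try {
        $html = & $Fetch $Url
    } catch {
        Write-Host "  skipped: $($_.Exception.Message)"
        return
    }

    foreach ($href in Get-LinkUrl -Html $html) {
        $next = Resolve-LinkUrl -PageUrl $Url -Href $href
        if ($next) {
            Invoke-SiteCrawl -Url $next -MaxLinks $MaxLinks -MaxLevel $MaxLevel `
                -Level ($Level + 1) -Visited $Visited -Fetch $Fetch
        }
    }
}
```

A run against a real site would look like `Invoke-SiteCrawl -Url 'https://example.com/'`, where the URL is a placeholder. Passing your own `HashSet[string]` through `-Visited` lets you inspect the list of crawled pages afterwards.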
